The Rubber Revolution: Tyre Choice Modeling

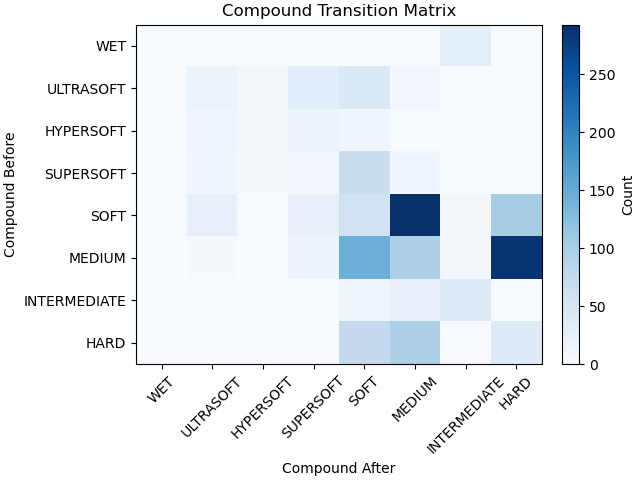

Data Pre-processing

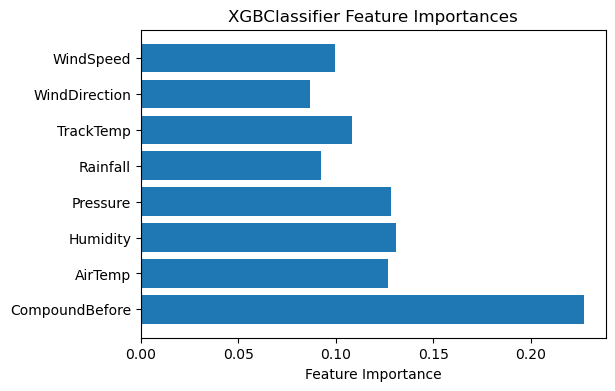

The data was obtained through the FastF1 Python package. The main preprocessing technique in this work is data reduction via feature selection. For the regression task, predicting the lap-time improvement from switching tires, the features are reduced to Air Temperature, Track Temperature, Humidity, Pressure, Rainfall (True/False), Wind Direction, and Wind Speed. The chosen model, XGBoost, is generally robust to untransformed features because it is tree-based, but there is still room for future work on transforming the data to aid the convergence of these models.
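A minimal sketch of the feature-selection step, written in plain Python so it runs without FastF1 or pandas installed. It assumes each record is a dictionary whose weather keys match the column names of FastF1's weather data table (`AirTemp`, `TrackTemp`, `Humidity`, `Pressure`, `Rainfall`, `WindDirection`, `WindSpeed`). The names `WEATHER_FEATURES` and `select_weather_features` are hypothetical, and the original pipeline may well have worked on DataFrames instead.

```python
# Hypothetical names; keys assumed to match FastF1's weather data columns.
WEATHER_FEATURES = (
    "AirTemp",
    "TrackTemp",
    "Humidity",
    "Pressure",
    "Rainfall",       # boolean flag: True if it is raining
    "WindDirection",
    "WindSpeed",
)


def select_weather_features(records):
    """Reduce each record to the seven weather features, in a fixed order.

    Any other keys (driver, lap number, compound, ...) are dropped.
    float() turns the boolean Rainfall flag into 1.0 / 0.0 and leaves
    numeric readings unchanged in value.
    """
    return [[float(rec[name]) for name in WEATHER_FEATURES] for rec in records]


# Illustrative record with made-up values.
sample = {
    "Driver": "VER", "LapNumber": 12,
    "AirTemp": 25.1, "TrackTemp": 40.3, "Humidity": 55.0,
    "Pressure": 1012.0, "Rainfall": False,
    "WindDirection": 180, "WindSpeed": 2.4,
}
X = select_weather_features([sample])
# X == [[25.1, 40.3, 55.0, 1012.0, 0.0, 180.0, 2.4]]
```

Fixing the column order in one tuple keeps every feature row aligned with the same seven inputs, which a tree model relies on when it splits by column position.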

ML Algorithms / Models Implemented

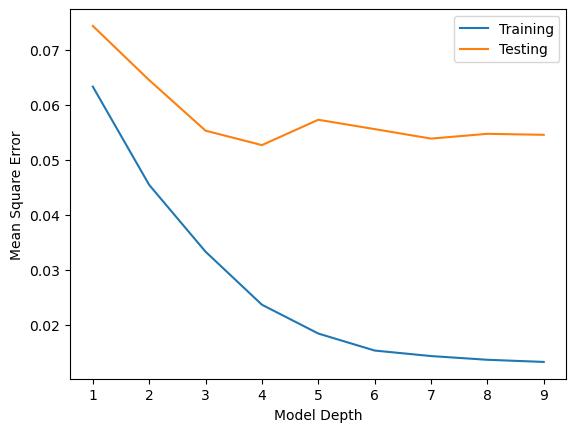

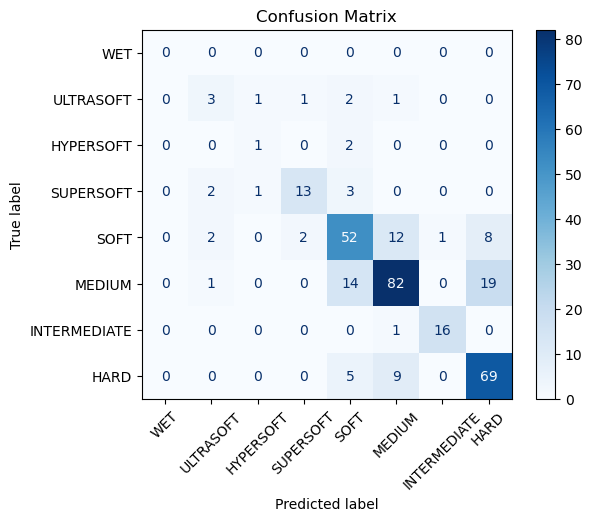

Several models were compared for the regression and classification problems. For the regression, predicting the lap-time improvement for a given tire change, I implemented a naive guess (the mean), linear regression, and XGBoost. XGBoost performed best; the hyperparameter sweep over tree depth is shown above. XGBoost was chosen because it is a strong tree-based model for both problems, scales well to large datasets, and is easy to tune to limit overfitting. For the classification, predicting which tire will be fitted next under the given weather conditions, I compared a naive guess of the most common label, a random forest, and XGBClassifier. Again, the boosted model performed best of the three, with the highest test accuracy of 73%.
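The two naive baselines set the floor that every other model has to beat, and they can be sketched in a few lines of plain Python. This sketch assumes the baselines are fitted on a training split and scored on a held-out test split; the source does not describe the split, and the function names are hypothetical.

```python
from collections import Counter
from statistics import mean


def naive_regression_mse(y_train, y_test):
    """MSE of always predicting the training-set mean lap-time improvement."""
    guess = mean(y_train)
    return mean((y - guess) ** 2 for y in y_test)


def naive_classification_accuracy(labels_train, labels_test):
    """Accuracy of always predicting the most common training label."""
    majority, _ = Counter(labels_train).most_common(1)[0]
    hits = sum(label == majority for label in labels_test)
    return hits / len(labels_test)
```

For example, with training labels `["SOFT", "MEDIUM", "SOFT"]` the classifier baseline always answers `"SOFT"`, so it scores 0.5 on a test set where half the next tires are soft.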

Quantitative Metrics

Regression

| Model | MSE |
|---|---|
| Naive (mean) | 0.080 |
| Linear Regression | 0.078 |
| XGBoost | 0.054 |

Classification

| Model | Test accuracy |
|---|---|
| Naive (most common label) | 0.359 |
| Random Forest | 0.718 |
| XGBClassifier | 0.730 |
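To read the tables in relative terms, the small helper below (a hypothetical name, not part of the original pipeline) computes the fractional improvement of a model over a baseline. Applied to the reported numbers, it gives roughly a 32.5% MSE reduction for XGBoost and about 2.5% for linear regression relative to the naive mean; on the classification side, the boosted classifier sits about 37 percentage points (0.730 vs 0.359) above the majority-label guess.

```python
def relative_improvement(baseline, model, lower_is_better=True):
    """Fractional improvement of a model's metric over a baseline's.

    For error metrics such as MSE (lower is better) this is the
    fractional reduction; for scores such as accuracy it is the
    fractional increase.
    """
    if baseline == 0:
        raise ValueError("baseline metric must be non-zero")
    if lower_is_better:
        return (baseline - model) / baseline
    return (model - baseline) / baseline


# Values copied from the regression table (MSE, lower is better).
xgb_gain = relative_improvement(0.08, 0.054)     # about 0.325
linear_gain = relative_improvement(0.08, 0.078)  # about 0.025
```

The relative form makes clear that linear regression barely improves on the mean, while XGBoost removes about a third of the baseline error.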

Final Model Analysis

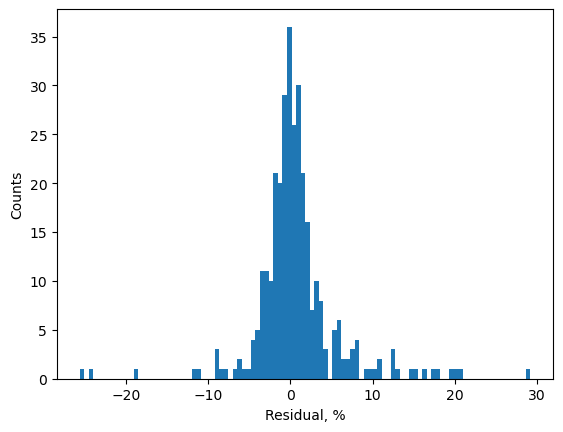

The final XGBoost model outperformed both regression baselines, and hyperparameter tuning identified an optimal tree depth of four, balancing performance against overfitting. The residual analysis, however, shows a slight negative correlation, which suggests systematic rather than purely random error: the model consistently misestimates part of the data. This highlights the inherent difficulty of predicting tire performance from weather data alone and points to the significant impact of unmodeled variables such as driver input and real-time track evolution. Overall, the model comparison still shows the gradient-boosted tree models outperforming the simpler models, indicating nonlinearities and steep gradients in the dataset that the higher-capacity models capture better.
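One way to put a number on the residual trend is the Pearson correlation between residuals and predictions. The sketch below assumes residuals are defined as actual minus predicted and compared against predicted values; the figure's exact axes are not stated, so both choices are assumptions, and the sign of the result depends on them. The function names are hypothetical.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = sqrt(sum((x - mean_x) ** 2 for x in xs))
    spread_y = sqrt(sum((y - mean_y) ** 2 for y in ys))
    if spread_x == 0 or spread_y == 0:
        raise ValueError("correlation is undefined for constant input")
    return cov / (spread_x * spread_y)


def residual_correlation(y_true, y_pred):
    """Correlation between residuals (actual - predicted) and predictions.

    A value near 0 is consistent with random error. A clearly negative
    value means residuals fall as predictions rise, i.e. the model tends
    to over-predict high values and under-predict low ones (under the
    assumed residual convention).
    """
    residuals = [t - p for t, p in zip(y_true, y_pred)]
    return pearson(list(y_pred), residuals)
```

A correlation close to zero would support treating the remaining error as noise; a persistent negative value, as described above, points to structure the weather features alone do not explain.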